This is an old revision of the document!

Table of Contents

Introduction

Many engineering problems initiate demands for large-scale computing, which must be feasible from the view of both time and available resources. The parallel processing allows not only obtaining results in acceptable time by significantly speeding up the analysis, but also performing large and complex analyses, which often do not fit into a single, even well equipped, machine with one processor unit (regardless of achieved speedup).

The design of parallel algorithms requires the partitioning of the problem into a set of tasks, the number of which is greater than or equal to the number of available processors. The partitioning of the problem can be fixed at runtime (static load balancing) or can change during the solution (dynamic load balancing).

Parallelization strategy

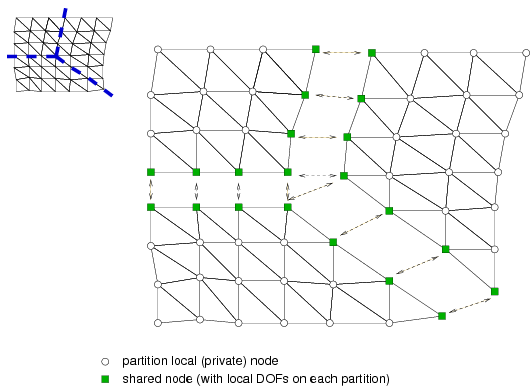

The adopted parallelization strategy in OOFEM is based on the mesh partitioning. Generally, two dual partitioning concepts for the parallelization of finite element codes exist. With respect to the character of a cut dividing the problem mesh into partitions, one can distinguish between node-cut and element-cut concepts.

In the presented study, the node-cut approach will be considered. This approach is based on a unique assignment of individual elements to partitions. A node is then either assigned to a partition (local node), if it is surrounded exclusively by elements assigned to that partition, or shared by several partitions (shared node), if it is incident to elements owned by different partitions

To be optimally load-balanced, the partitioning should take into account a number of factors, namely

- The computational work on each sub-domain should be balanced, so that no processor will be waiting for others to complete. The computational work is typically related to elements, and can be, for example, computed as a sum of individual element computational weights, which express the amount of numerical work (number of operations) associated with each element compared to the selected reference element. The overall computational work should be distributed among individual processors according to their relative performance.

- The interface between the partitions should be minimal, in order to reduce the communication requirements among partitions. When assembling the governing equations, the contribution from neighboring nodes/elements is often needed and the accumulation of remote contributions will incur communication. The cost of accessing remote memory is far higher than that of accessing local memory, therefore it is important to minimize the communication cost.

Communication Layer

The parallel distributed memory programming model is relying on message passing, a form of communication based on sending and receiving messages. OOFEM is based on Message Passing Interface (MPI). High-level communication services were developed on the top of the message passing library. On the lower level, the CommunicationBuffer class is defined, providing services for sending/receiving receiver and packing/unpacking services. Message packing/unpacking} to form a continuous data stream that can be send in a single message, avoids fine-grained communication, due to overhead connected with sending small messages instead of a single one (latency issues).

To facilitate the mutual data exchange between sub-domains, the ProcessCommunicatorBuffer class is introduced. It maintains its send and receive buffers (instances of the CommunicationBuffer class) that are used to communicate with a remote sub-domain. The separate send and receive buffers are necessary in order to fully exploit the advantages of non-blocking communication of inter-partition communication with mutual data exchange. Such exchange typically involves data related to certain entities only (for example, located on inter-partition boundaries) and is repeated several times for each solution step. This leads to the introduction of the ProcessCommunicator class, which maintains the communication rank of a remote partition, communication maps and an instance of the ProcessCommunicatorBuffer class. Communication maps, which can be thought of as lists of entities (nodes, elements) that participate in the communication, are established according to the mesh partitioning prior to the actual analysis, separately for the send and receive operations. The corresponding local send and remote receive (and vice versa) maps must be uniquely ordered to guarantee the correctness of packing and unpacking operations. In general, the communication maps vary dynamically during the analysis to reflect the potential repartitioning. The ProcessCommunicator class provides high-level services for packing and unpacking relevant data. A general implementation of these operations has been obtained using call-back procedures, which pack and unpack the data according to the communication maps using the elementary services provided by the CommunicationBuffer class.

Further reading

- B. Patzák and D. Rypl. Parallel adaptive finite element computations with dynamic load balancing. In B. H. V. Topping, L. F. Costa Neves, and R. C. Barros, editors, Proceedings of the Twelfth International Conference on Civil, Structural and Environmental Engineering Computing, Stirlingshire, United Kingdom, 2009. Civil-Comp Press. paper 114.

- B. Patzák and D. Rypl. A framework for parallel adaptive finite element computations with dynamic load balancing. In B. H. V. Topping, editor, CD-ROM Proceedings of the First International Conference on Parallel, Distributed and Grid Computing for Engineering, Pecs, Hungary, 2009. Civil-Comp Press. ISSN: 1759-3433, ISBN: 978-1-905088-29-4.

- B. Patzak,D. Rypl, and Z. Bittnar: Parallel explicit finite element dynamics with nonlocal constitutive models, Computers and Structures, 79 (26-28), 2287-2297, 2001.